Without Change Data Feed (CDF), capturing and managing changes for up-to-date silver and gold tables requires scanning and reading the entire table, which creates significant overhead that can slow performance. With CDF, you can now read a Delta table's change feed at the row level rather than the entire table. This improves your data pipeline performance and simplifies its operations.

**So when should you enable Change Data Feed?**

The following use cases should drive when you enable the change data feed:

- Audit trail table: Capturing the change data feed as a Delta table provides perpetual storage and efficient query capability to see all changes over time, including when deletes occur and what updates were made.
- Transmit changes: Send a change data feed to downstream systems such as Kafka or an RDBMS that can use the feed to process later stages of data pipelines incrementally.
- Silver and Gold tables: Improve Delta Lake performance by streaming only row-level changes to keep silver and gold tables up to date. The benefit is especially apparent following MERGE, UPDATE, or DELETE operations, accelerating and simplifying ETL operations.

To enable CDF, you must explicitly use one of the following methods:

- New table: Set the table property `delta.enableChangeDataFeed = true` in the `CREATE TABLE` command.
- All new tables: `set .defaults.enableChangeDataFeed = true`

Only changes made after you enable the change data feed are recorded; past changes to a table are not captured. An important thing to remember is that once you enable the change data feed option for a table, you can no longer write to the table using Delta Lake 1.2.1 or below.

In the spirit of performance optimizations, Delta Lake 2.0.0 also includes these additional features:

- Support for idempotent writes to Delta tables, enabling fault-tolerant retries of Delta table writing jobs without writing the data multiple times to the table.
- Support for dynamic partition overwrite, which replaces only the partitions that the incoming data writes to. Enable it with `SET = dynamic`, then run the overwrite, for example `INSERT OVERWRITE TABLE default.people10m SELECT * FROM morePeople`. Note that dynamic partition overwrite conflicts with the `replaceWhere` option for partitioned tables.

For more information, see the documentation.
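The row-level change feed can be pictured with a toy model in plain Python. This is a hedged sketch, not Delta Lake's implementation: real CDF records changes as each write commits rather than by diffing snapshots, and `change_feed`, `apply_changes`, and the sample rows are hypothetical. The `_change_type` values (`insert`, `update_preimage`, `update_postimage`, `delete`) and the `_commit_version` column mirror the metadata columns that Delta's change feed exposes.

```python
from typing import Dict, List

# Toy model of Change Data Feed records; not Delta Lake's implementation.
Row = Dict[str, object]


def change_feed(before: Dict[int, Row], after: Dict[int, Row], version: int) -> List[Row]:
    """Return row-level change records between two table snapshots keyed by id.

    Assumption: real CDF captures changes as each write commits; diffing two
    snapshots here only stands in for that to keep the example small.
    """
    changes: List[Row] = []
    for key in sorted(before.keys() | after.keys()):
        old, new = before.get(key), after.get(key)
        if old is None:
            changes.append({**new, "_change_type": "insert", "_commit_version": version})
        elif new is None:
            changes.append({**old, "_change_type": "delete", "_commit_version": version})
        elif old != new:
            # An update is emitted as a pre-image / post-image pair.
            changes.append({**old, "_change_type": "update_preimage", "_commit_version": version})
            changes.append({**new, "_change_type": "update_postimage", "_commit_version": version})
    return changes


def apply_changes(silver: Dict[int, Row], changes: List[Row], key: str = "id") -> None:
    """Keep a downstream (silver) table current using only the change records."""
    for change in changes:
        kind = change["_change_type"]
        row = {k: v for k, v in change.items() if not k.startswith("_")}
        if kind in ("insert", "update_postimage"):
            silver[row[key]] = row
        elif kind == "delete":
            silver.pop(row[key], None)
        # update_preimage rows carry the old values; useful for auditing, ignored here.


# Hypothetical snapshots of a small table at versions 0 and 1.
v0 = {1: {"id": 1, "name": "Ana"}, 2: {"id": 2, "name": "Bo"}}
v1 = {1: {"id": 1, "name": "Ana"}, 2: {"id": 2, "name": "Bob"}, 3: {"id": 3, "name": "Cy"}}

changes = change_feed(v0, v1, version=1)
silver = dict(v0)  # downstream copy as of version 0
apply_changes(silver, changes)
```

Here the downstream table only processes three change records (an update pair and an insert) instead of re-reading every row. In Delta Lake itself, the feed is read with `spark.read.format("delta").option("readChangeFeed", "true")` together with a starting version or timestamp.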

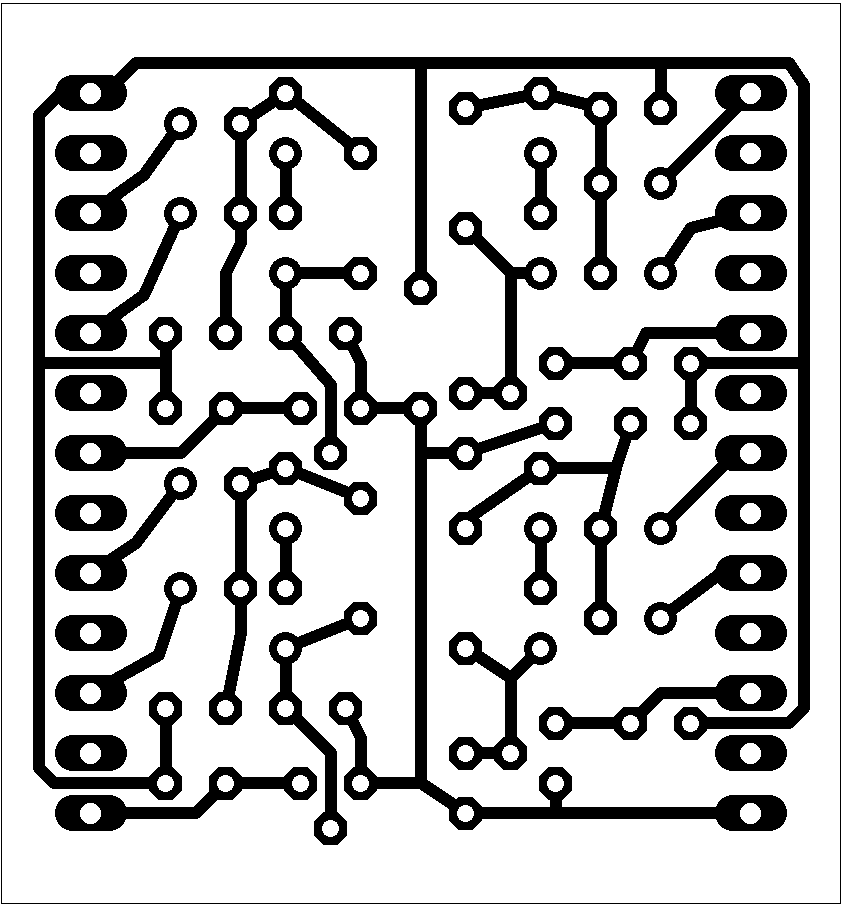
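The idempotent-write guarantee described above can be illustrated with a small in-memory model. This is a sketch under stated assumptions, not Delta Lake's implementation: `ToyTable`, `append`, and the job name are hypothetical, and the model assumes the table remembers the highest committed transaction version per application id and ignores any write whose version is not newer. In Delta Lake the corresponding writer options are `txnAppId` and `txnVersion`.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ToyTable:
    """Hypothetical table that deduplicates writes by (app_id, version)."""

    rows: List[dict] = field(default_factory=list)
    latest_txn: Dict[str, int] = field(default_factory=dict)

    def append(self, batch: List[dict], app_id: str, version: int) -> bool:
        """Append batch unless this app already committed this version (or a later one)."""
        if self.latest_txn.get(app_id, -1) >= version:
            return False  # duplicate: a retried write is skipped, not re-applied
        # In a real table, the rows and the transaction marker commit atomically.
        self.rows.extend(batch)
        self.latest_txn[app_id] = version
        return True


table = ToyTable()
batch = [{"id": 1}, {"id": 2}]
first = table.append(batch, app_id="ingest-job", version=7)
retry = table.append(batch, app_id="ingest-job", version=7)  # e.g. the job restarted after a failure
```

Because the retry carries the same application id and version, it is recognized as already committed and the two rows are written only once.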

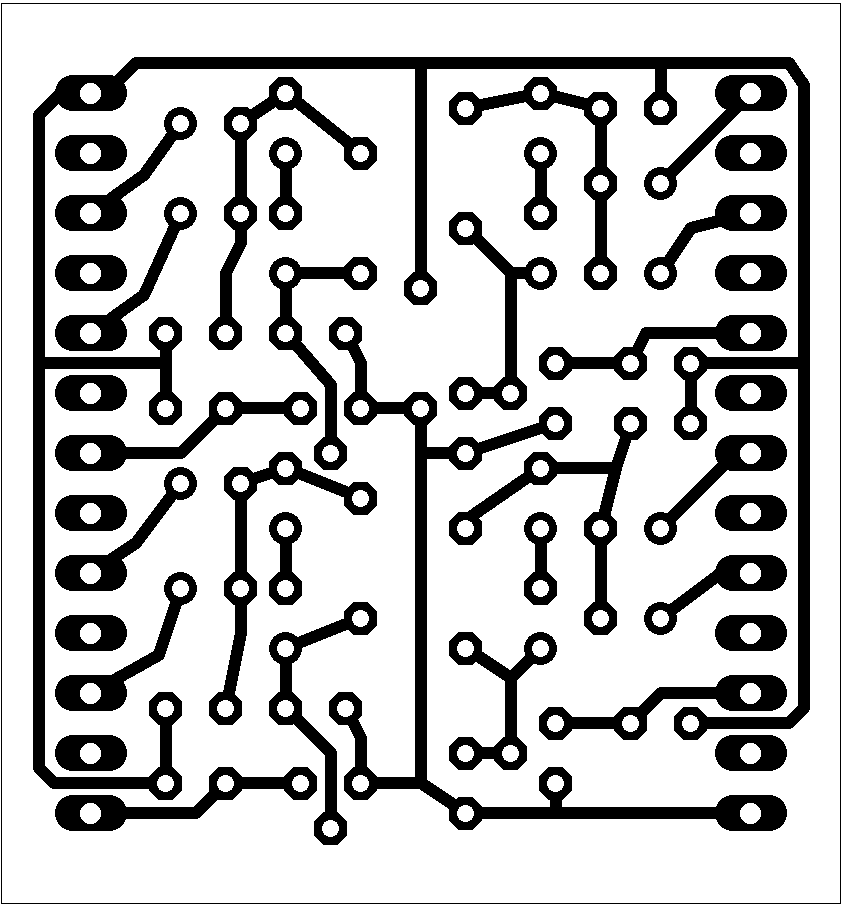
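To make the dynamic partition overwrite semantics concrete, here is a toy model over a dictionary of partitions. It is an illustration only: the `overwrite` function, the `country` partition column, and the sample rows (loosely named after `people10m` and `morePeople`) are hypothetical, and a real `INSERT OVERWRITE` rewrites data files in a transaction rather than dictionary entries.

```python
from collections import defaultdict
from typing import Dict, List

# Toy model: a partitioned table as {partition value: rows}. Hypothetical, not Delta's API.
Table = Dict[str, List[dict]]


def overwrite(table: Table, new_rows: List[dict], part_col: str, dynamic: bool) -> Table:
    """Return the table after an INSERT OVERWRITE of new_rows (input is not mutated)."""
    incoming: Dict[str, List[dict]] = defaultdict(list)
    for row in new_rows:
        incoming[row[part_col]].append(row)
    if dynamic:
        # Dynamic mode: only partitions that receive new rows are replaced.
        result = dict(table)
        result.update(incoming)
        return result
    # Static mode without a partition spec: the whole table is replaced.
    return dict(incoming)


people = {
    "US": [{"name": "Ana", "country": "US"}],
    "DE": [{"name": "Bo", "country": "DE"}],
}
more_people = [{"name": "Cy", "country": "DE"}]

dyn = overwrite(people, more_people, part_col="country", dynamic=True)
static = overwrite(people, more_people, part_col="country", dynamic=False)
```

With dynamic overwrite, the `US` partition survives and only `DE` is replaced; with a static overwrite, the `US` rows are gone because the whole table was rewritten.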

One of the biggest value propositions of Delta Lake is its ability to maintain data reliability in the face of changing records brought on by data streams.

**Support for data skipping via column statistics**

When querying any table from HDFS or cloud object storage, by default, your query engine will scan all of the files that make up your table. This can be inefficient, especially if you only need a smaller subset of data. To improve this process, as part of the Delta Lake 1.2 release, we included support for data skipping by utilizing the Delta table's column statistics. For example, when running a query that filters on year and uid, you do not want to unnecessarily read files whose values fall outside of the requested year or uid ranges.

When exploring or slicing data using dashboards, data practitioners will often run queries with a specific filter in place. As a result, the matching data is often buried in a large table, requiring Delta Lake to read a significant amount of data. With data skipping via column statistics and Z-order, the data can be clustered by the most common filters used in queries, sorting the table so that irrelevant data can be skipped, which can dramatically increase query performance.

Regular sorting by a primary and then a secondary column keeps the primary column tightly clustered but spreads each value of the secondary column across many files. With Z-order, its space-filling curve provides better multi-column data clustering. This data clustering allows column stats to be more effective in skipping data based on filters in a query. The figure compares regular sorting of data by primary and secondary columns (left) with 2-dimensional Z-order data clustering for two columns (right). See the documentation and this blog for more details.
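The interplay of per-file min/max statistics and Z-order clustering described above can be modeled in a few lines of Python. This is a toy illustration under stated assumptions: 64 hypothetical rows with two integer columns, eight rows per file, and plain bit interleaving (Morton codes) as the space-filling curve. Delta Lake's own Z-order implementation may differ in its details, and `z_value`, `write_files`, and `files_to_read` are hypothetical helpers.

```python
from typing import Callable, List, Optional, Tuple

Point = Tuple[int, int]            # values of two filter columns (x, y) in one row
Stats = Tuple[int, int, int, int]  # per-file (min_x, max_x, min_y, max_y)
Range = Optional[Tuple[int, int]]  # inclusive filter range; None means no filter


def z_value(x: int, y: int, bits: int = 3) -> int:
    """Interleave the bits of x and y to get a Morton (Z-order) key."""
    z = 0
    for i in range(bits):
        z |= ((x >> i) & 1) << (2 * i + 1)
        z |= ((y >> i) & 1) << (2 * i)
    return z


def write_files(rows: List[Point], key: Callable[[Point], object], rows_per_file: int) -> List[Stats]:
    """Sort rows by key, cut them into fixed-size files, and record min/max stats per file."""
    ordered = sorted(rows, key=key)
    stats: List[Stats] = []
    for start in range(0, len(ordered), rows_per_file):
        chunk = ordered[start:start + rows_per_file]
        xs = [p[0] for p in chunk]
        ys = [p[1] for p in chunk]
        stats.append((min(xs), max(xs), min(ys), max(ys)))
    return stats


def files_to_read(stats: List[Stats], x_range: Range = None, y_range: Range = None) -> int:
    """Count files whose min/max ranges overlap the filter; all others are skipped."""
    def overlaps(lo: int, hi: int, rng: Range) -> bool:
        return rng is None or (lo <= rng[1] and rng[0] <= hi)

    return sum(
        1
        for min_x, max_x, min_y, max_y in stats
        if overlaps(min_x, max_x, x_range) and overlaps(min_y, max_y, y_range)
    )


rows = [(x, y) for x in range(8) for y in range(8)]  # 64 hypothetical rows
linear = write_files(rows, key=lambda p: (p[0], p[1]), rows_per_file=8)
zorder = write_files(rows, key=lambda p: z_value(p[0], p[1]), rows_per_file=8)

for name, stats in [("linear", linear), ("z-order", zorder)]:
    # Files read for a filter on x only, then for a filter on y only.
    print(name, files_to_read(stats, x_range=(5, 5)), files_to_read(stats, y_range=(1, 1)))
```

Under these assumptions the loop prints `linear 1 8` and `z-order 2 4`: the linear sort is ideal for the primary column but skips nothing for the secondary one, while Z-order gives up a little on the primary column in exchange for much better skipping on the secondary.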
